Your CLAUDE.md Doesn't Work (Without Context Engineering)

“Context engineering over prompt engineering” - Andrej Karpathy

You’ve watched all the Claude Code tutorials.

You’ve created your shiny CLAUDE.md with good architecture and design rules. Time to code!

But after Claude Code has been making changes for a while, it starts forgetting your rules and stops writing code the way you need.

The problem isn’t your rules. The problem is you’re not controlling the context.

Why Having a CLAUDE.md Isn’t Enough

My CLAUDE.md defines clear and solid principles. But there are two problems:

1. Claude Code can ignore your CLAUDE.md

As explained by HumanLayer in their article about CLAUDE.md, Claude Code injects a system reminder that explicitly states:

<system-reminder>

IMPORTANT: this context may or may not be relevant to your tasks.

You should not respond to this context unless it is highly relevant to your task.

</system-reminder>

Claude will ignore your CLAUDE.md if it decides it’s not relevant to the current task. The more specific instructions or temporary “hotfixes” you have, the more likely it is to ignore them.

Solution: Keep only universally applicable principles. Avoid context-specific instructions.

2. The best rules don’t work with too much context

What matters isn’t context windows, it’s attention windows

LLM vendors advertise huge context windows: Claude 3.5 Sonnet accepts 200k tokens, Gemini 1 million. Sounds impressive.

But you need to differentiate between how much context they accept (the context window) and how much context they can effectively process (the attention window).

Context window vs attention window

Vendors don’t publish information about their attention windows, to avoid revealing implementation details of their architectures. So the community has tried to measure the attention window empirically, and test after test points the same way: the attention window is smaller than the context window.

| Model | Context Window | Attention Window | Source |
|---|---|---|---|
| GPT-4 Turbo | 128k tokens | ~64k tokens (50%) | RULER Benchmark |
| Claude 3/3.5 | 200k tokens | No data found | Anthropic: “LLMs lose focus with long contexts” |
| LLaMA 3.1 | 128k tokens | ~32k tokens (25%) | Databricks RAG Evaluations |
| Mistral 7B | 32k tokens | ~16k tokens (50%) | RULER Benchmark |

Tests show that after 50-60% of the context window, accuracy drops 20-50% depending on the model.
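To make the table's arithmetic concrete, here is a minimal sketch that turns a context window and an estimated effective fraction into a token budget. The `ContextBudget` class and its method names are illustrative assumptions, not part of Claude Code or any benchmark tooling; the fractions are simply the ones listed in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextBudget:
    """Hypothetical helper: advertised window vs. estimated usable share."""
    window_tokens: int         # advertised context window
    effective_fraction: float  # share of the window assumed to stay reliable

    @property
    def effective_tokens(self) -> int:
        # Estimated "attention window" in tokens.
        return round(self.window_tokens * self.effective_fraction)

    def is_degraded(self, used_tokens: int) -> bool:
        # True once usage goes past the estimated attention window.
        return used_tokens > self.effective_tokens


# Values copied from the table above.
budgets = {
    "GPT-4 Turbo": ContextBudget(128_000, 0.50),
    "LLaMA 3.1": ContextBudget(128_000, 0.25),
    "Mistral 7B": ContextBudget(32_000, 0.50),
}

for name, budget in budgets.items():
    print(f"{name}: ~{budget.effective_tokens:,} effective tokens")
```

The point of the sketch is only that the number to watch is the effective budget, not the advertised window.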

After several months working with Claude Code, my typical workflow was:

- Half an hour of spectacular productivity: Claude works flawlessly, following my CLAUDE.md.

- 45 minutes later: “Claude, not like that, remember your CLAUDE.md”. But it starts ignoring my design rules and project context: Why is it creating a new service if I asked it to use the existing one? Why hasn’t it implemented tests?

- 1 hour later: Claude Code will automatically compact the context. Will I stay above 60%? Do I /clear and explain everything again? Do I continue like this and risk it continuing to ignore my rules?

- 2 weeks later: “Where did I save that authentication research from last week?” -> I have to ask for it again from scratch.

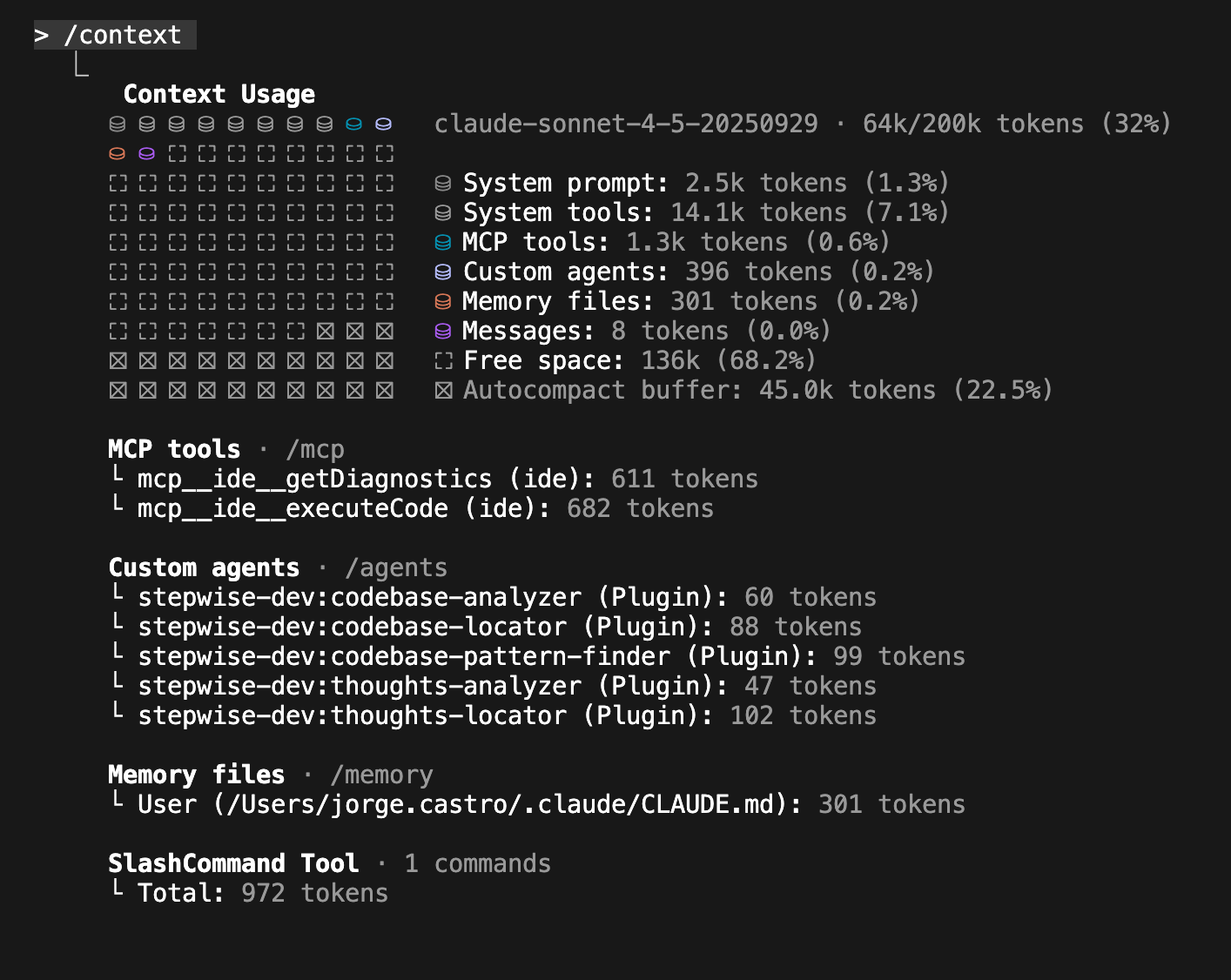

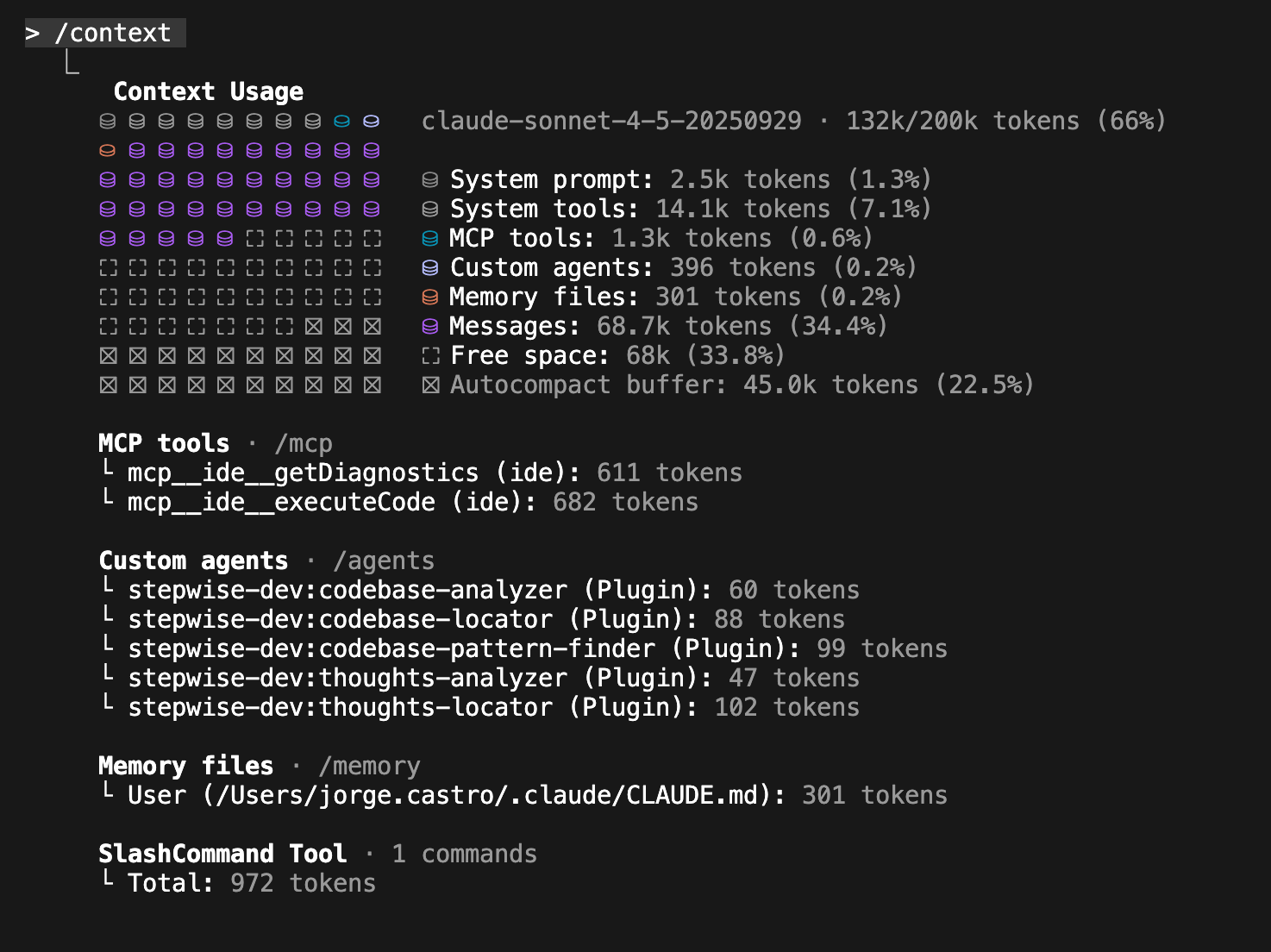

This is my Claude Code context in a new, clean session. It starts at 32% 😱:

Then I found the “Frequent Intentional Compaction” (FIC) framework developed by Dex Horthy and HumanLayer. This framework proposes a structured workflow in phases (Research -> Plan -> Implement -> Validate) to keep context controlled. I created the stepwise-dev plugin to automate and implement this FIC workflow in Claude Code, keeping context below 60% systematically.

The FIC Framework (Frequent Intentional Compaction)

As Dex Horthy explains in his talk about Context Engineering, the context problem is solved with a structured workflow:

“The key is to separate research, planning, and implementation into distinct phases with frequent intentional compaction.”

The FIC framework proposes 4 independent phases:

- Research: Investigation without implementation

- Plan: Iterative design before code

- Implement: Execution by phases

- Validate: Systematic verification

Between phases, you clear the context intentionally (/clear), while the knowledge persists in structured files.

Stepwise-dev automates this FIC workflow, providing specific commands for each phase and automatically managing knowledge persistence in the thoughts/ directory.
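The idea of clearing context between phases while keeping knowledge on disk can be sketched as a toy model. This is not how stepwise-dev or Claude Code are implemented; `Workflow`, `context`, and `artifacts` are hypothetical names, and the dictionary stands in for files under thoughts/.

```python
from dataclasses import dataclass, field

PHASES = ("research", "plan", "implement", "validate")


@dataclass
class Workflow:
    context: list[str] = field(default_factory=list)         # what the model currently sees
    artifacts: dict[str, str] = field(default_factory=dict)  # stand-in for files on disk

    def run(self, phase: str) -> None:
        # A phase reloads only the artifact written by the previous phase.
        index = PHASES.index(phase)
        if index > 0:
            self.context.append(self.artifacts[PHASES[index - 1]])
        self.context.append(f"working notes for {phase}")
        # The phase leaves a compact artifact behind.
        self.artifacts[phase] = f"summary of {phase}"

    def clear(self) -> None:
        # Intentional compaction: drop the context, keep the artifacts.
        self.context.clear()


wf = Workflow()
for phase in PHASES:
    wf.run(phase)
    wf.clear()

print(wf.context)            # []
print(sorted(wf.artifacts))  # ['implement', 'plan', 'research', 'validate']
```

Each phase starts from an empty context plus one small artifact, which is why the working set stays bounded no matter how long the overall task runs.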

How does stepwise-dev implement the FIC framework?

The solution isn’t writing better prompts. It’s structuring your workflow to keep context controlled.

Stepwise-dev implements the 4 FIC phases through specific commands. Each phase starts with clean context.

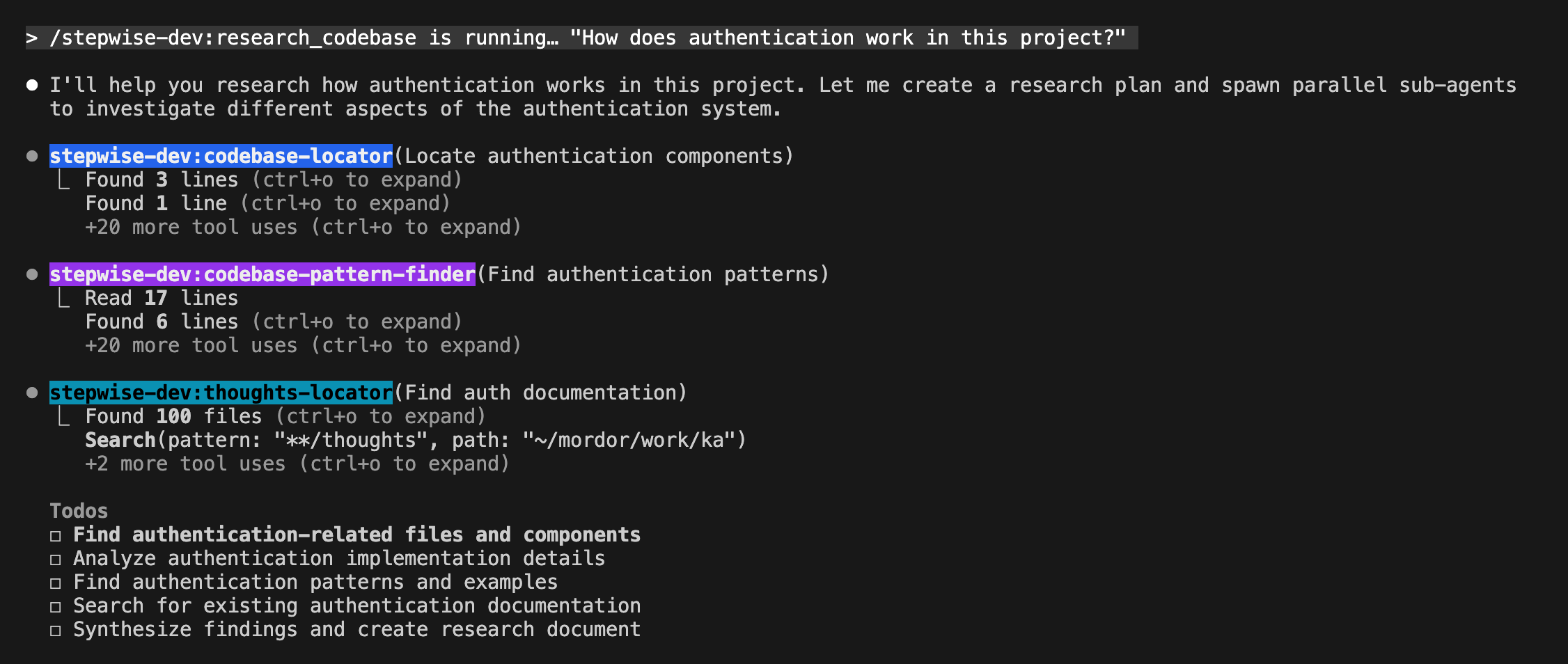

Research: Investigate without implementing (/stepwise-dev:research_codebase)

The problem: you ask Claude “investigate how X works”. Claude loads 30 files, analyzes everything, and you end up with 65% context filled with information you no longer need.

/stepwise-dev:research_codebase "How does authentication work in this project?"

/clear

stepwise-dev launches up to 5 specialized agents in parallel (codebase-locator, codebase-analyzer, pattern-finder…) and generates a document in thoughts/shared/research/.

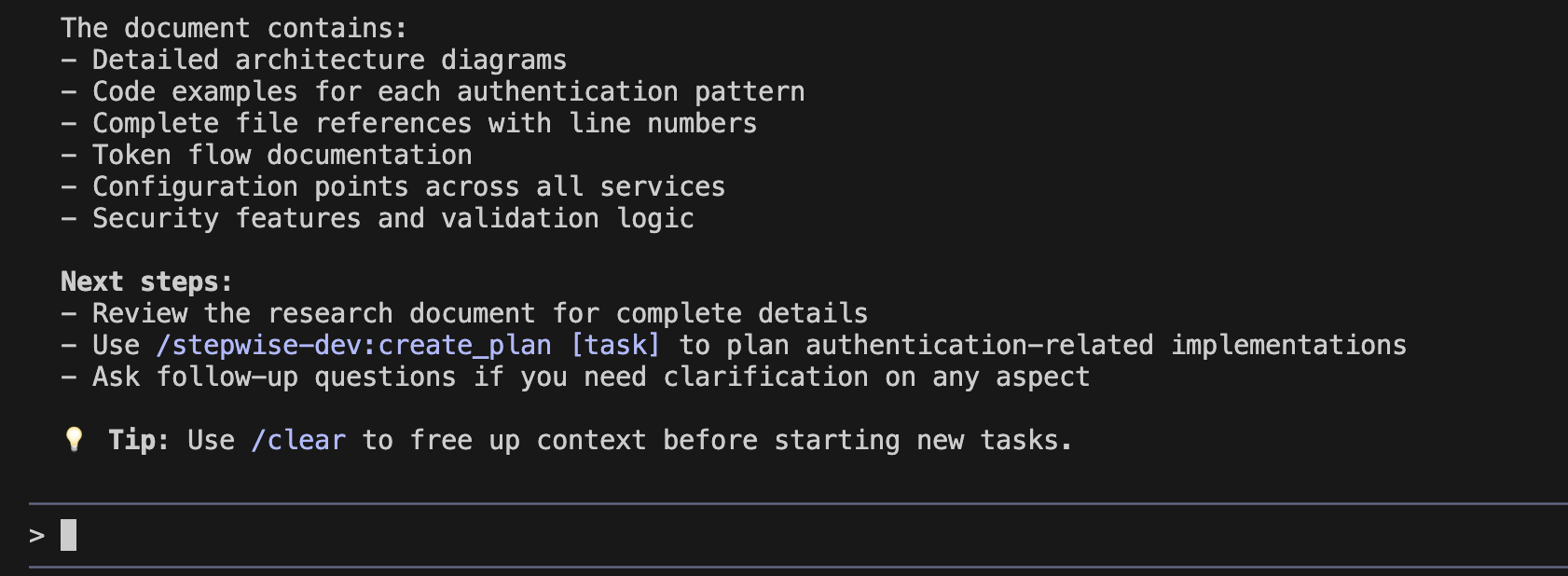

When stepwise-dev finishes the research, it guides you on the next steps (the other commands do this too).

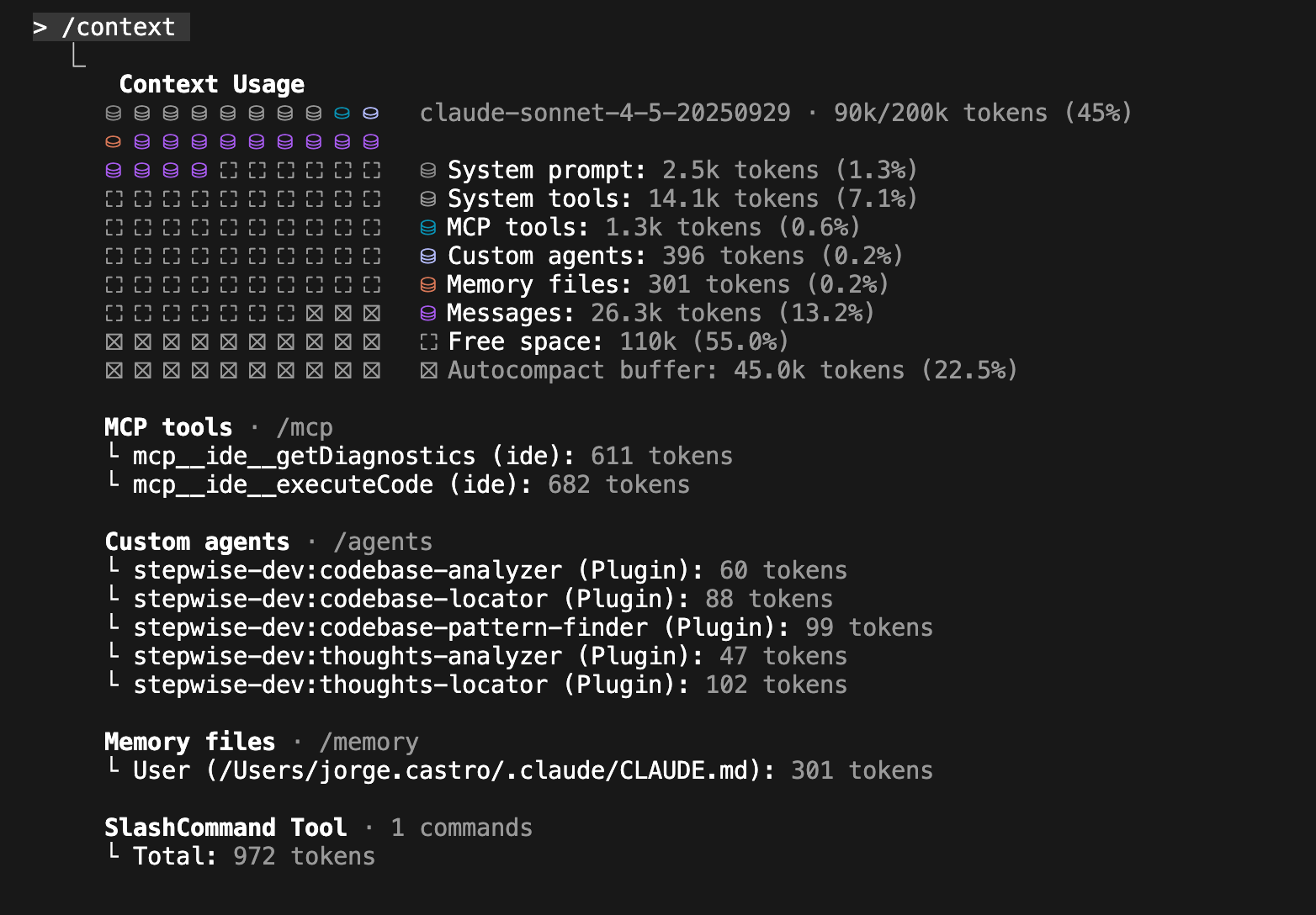

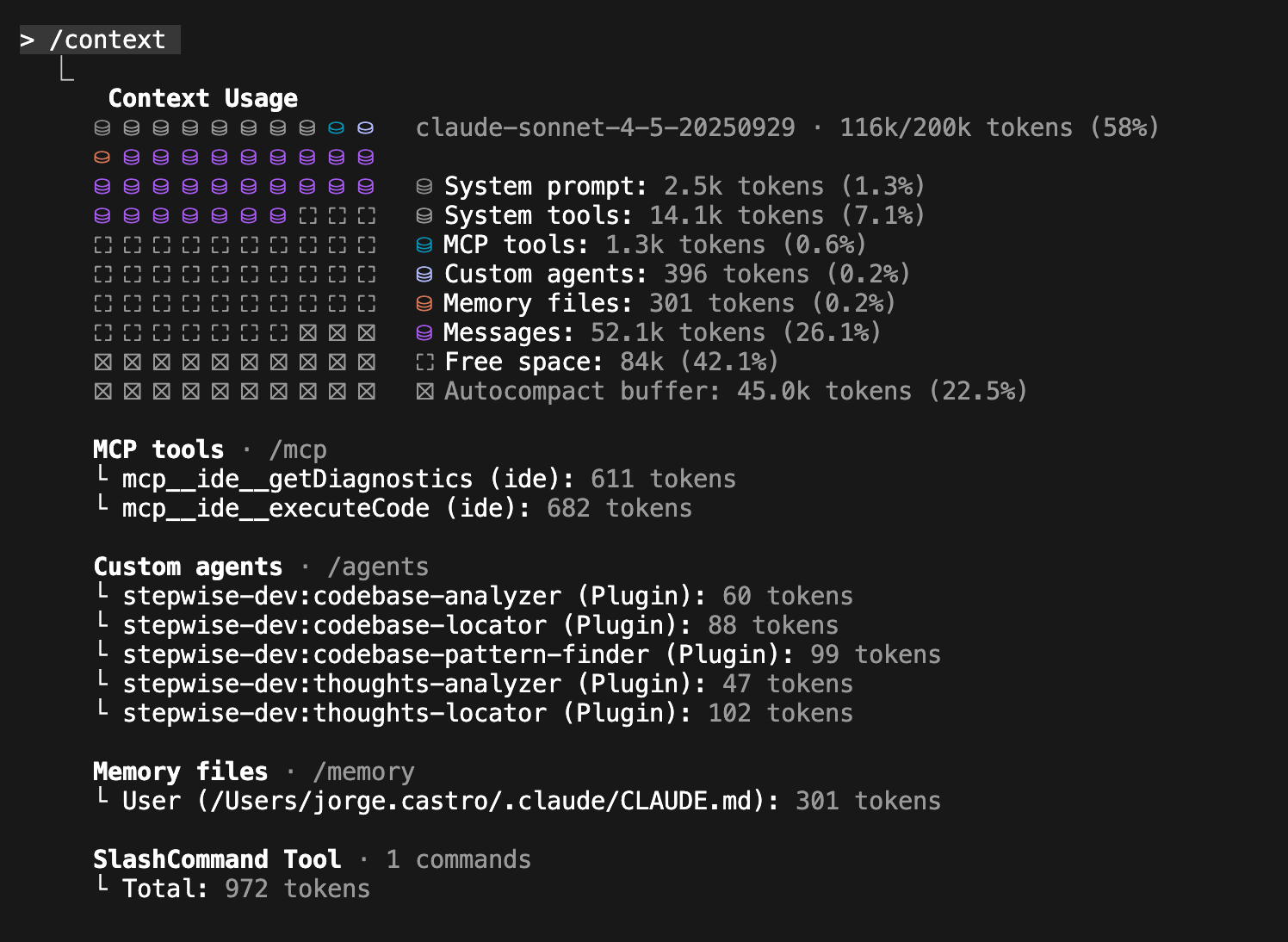

This is my context after conducting research in a folder with 7 projects that interact with each other but are implemented with different technologies (Astro, Java, Python):

After /clear, persistent knowledge. Clean context.

Plan: Design before implementing (/stepwise-dev:create_plan)

As Dex Horthy says in Context Engineering SF: Advanced Context Engineering for Agents:

“A bad line of code is… a bad line of code. But a bad line of a plan could lead to hundreds of bad lines of code.”

Reviewing 200 lines of plan is easier than reviewing 2000 lines of code.

/stepwise-dev:create_plan @thoughts/shared/research/2025-11-15-auth.md Add authentication between services

/clear

# Optional: iterate the plan

/stepwise-dev:iterate_plan @thoughts/shared/plans/2025-11-15-auth.md Add support for token refresh and expiration handling

/clear

Claude creates a structured plan in phases. You iterate as many times as you want until the plan is solid.

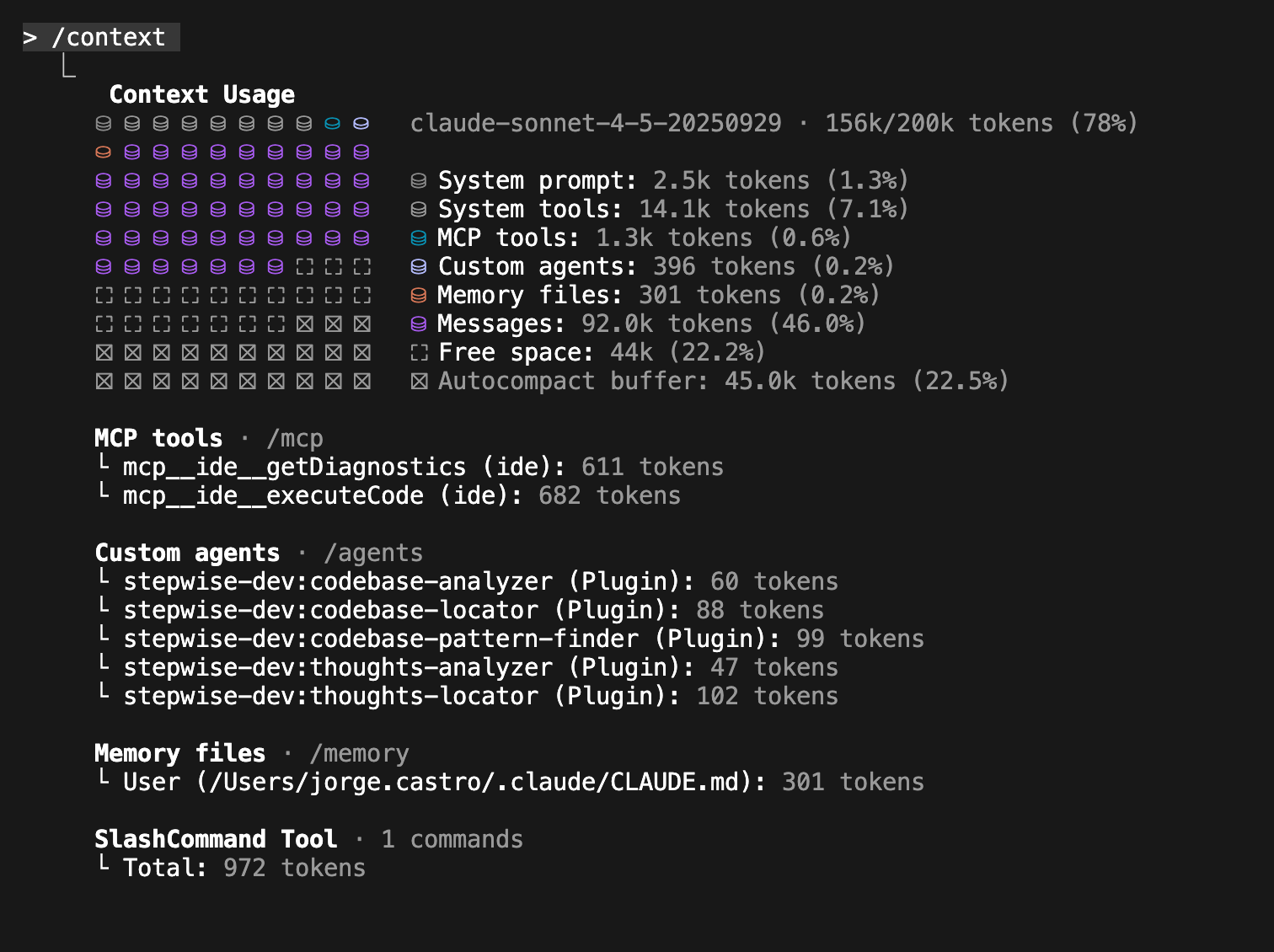

Remember that I ran /stepwise-dev:create_plan in a folder with 7 projects that interact with each other but are implemented with different technologies (Astro, Java, Python):

Implement: Implement phases one at a time (/stepwise-dev:implement_plan)

Stepwise-dev lets you implement the complete plan in a single run. The problem is that the context will then likely grow beyond 60%.

That’s why it’s important to execute it in phases:

/stepwise-dev:implement_plan @thoughts/shared/plans/2025-11-15-oauth.md Phase 1 only

/clear

Claude Code reads the complete plan, implements only ONE phase, runs tests, and waits for your confirmation.
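As a rough illustration of working on one phase of a phased plan at a time, the sketch below pulls a single phase out of a Markdown plan. The `## Phase N` heading convention and the `extract_phase` function are assumptions made for this example; the actual layout of the plans stepwise-dev generates may differ.

```python
import re

# Hypothetical plan text; the "## Phase N" headings are an assumed convention.
SAMPLE_PLAN = (
    "# Plan\n\n"
    "## Phase 1: Token model\n- add Token class\n\n"
    "## Phase 2: Refresh\n- add refresh endpoint\n"
)


def extract_phase(plan_markdown: str, number: int) -> str:
    """Return the heading and body of one phase from a phased plan."""
    # Split just before every "## Phase <n>" heading, keeping each heading with its body.
    sections = re.split(r"^(?=## Phase \d+\b)", plan_markdown, flags=re.MULTILINE)
    for section in sections:
        # \b keeps "Phase 1" from matching "Phase 10".
        if re.match(rf"## Phase {number}\b", section):
            return section.strip()
    raise KeyError(f"Phase {number} not found in plan")


print(extract_phase(SAMPLE_PLAN, 1))
```

Scoping the work to one section like this is what keeps a single implementation session small, even when the plan itself is long.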

/stepwise-dev:implement_plan @thoughts/shared/plans/2025-11-15-oauth.md Phase 2 only

/clear

Result: Context never exceeds 60% in small/medium projects and stays very close to 60% in large projects. The code is coherent because each phase has clean context.

Validate: Verify systematically (/stepwise-dev:validate_plan)

/stepwise-dev:validate_plan @thoughts/shared/plans/2025-11-15-oauth.md

/clear

Claude verifies everything is implemented: all phases completed, tests passing, code matches plan, no undocumented deviations.
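The four checks listed above can be thought of as a simple report. The sketch below is only a way to picture the outcome of a validation run; `ValidationReport` and its field names are hypothetical and do not describe stepwise-dev's real output format.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ValidationReport:
    """Hypothetical summary of a validation run (one flag per check)."""
    phases_completed: bool
    tests_passing: bool
    code_matches_plan: bool
    deviations_documented: bool

    def failures(self) -> list[str]:
        # Names of the checks that did not pass, in declaration order.
        return [name for name, ok in asdict(self).items() if not ok]

    @property
    def passed(self) -> bool:
        return not self.failures()


report = ValidationReport(
    phases_completed=True,
    tests_passing=True,
    code_matches_plan=False,
    deviations_documented=True,
)
print(report.passed)      # False
print(report.failures())  # ['code_matches_plan']
```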

Want to try it?

Install stepwise-dev and use it in your next work session.

# In Claude Code

/plugin marketplace add nikeyes/stepwise-dev

/plugin install stepwise-dev@stepwise-dev

# Restart Claude Code

What really changes with Stepwise-dev

I used Claude Code for months before creating stepwise-dev.

The problem wasn’t knowing how to write maintainable code with Claude Code. The problem was managing context well.

The fundamental difference is the thoughts/ directory:

The thoughts/ directory is an external brain for Claude Code. Each phase produces reusable knowledge for Claude Code, its agents, other sessions, or humans.

With stepwise-dev:

- Research -> Saved in thoughts/shared/research/ -> /clear without fear

- Plans -> Saved in thoughts/shared/plans/ -> Iterative design without filling context

- Implement -> Reference the plan -> Context always < 60%

- Validate -> Compare against plan -> Systematic verification
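The directory layout in the list above can be sketched as a small path helper. The date-prefixed file name mirrors the example paths used earlier in this article (such as thoughts/shared/research/2025-11-15-auth.md); treating that as a general naming rule is an assumption, and `artifact_path` is a hypothetical function, not part of the plugin.

```python
from datetime import date
from pathlib import PurePosixPath

THOUGHTS_ROOT = PurePosixPath("thoughts/shared")
KINDS = {"research", "plans"}  # the two subdirectories named in the article


def artifact_path(kind: str, topic: str, day: date) -> PurePosixPath:
    """Build a date-prefixed path for a research or plan document (assumed convention)."""
    if kind not in KINDS:
        raise ValueError(f"unknown artifact kind: {kind!r}")
    # Lowercase the topic and join its words with hyphens.
    slug = "-".join(topic.lower().split())
    return THOUGHTS_ROOT / kind / f"{day.isoformat()}-{slug}.md"


print(artifact_path("research", "auth", date(2025, 11, 15)))
# thoughts/shared/research/2025-11-15-auth.md
```

A predictable location and name is what makes it cheap to hand a previous session's output to the next command with an @ reference.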

What it solves:

- “Where did I save that info?” -> Everything in thoughts/shared/, always accessible

- “Why did we decide this?” -> Each decision has its associated research or plan

- “New to the team” -> Read shared/ and understand the project

- “Research again” -> If it’s in thoughts/, no need to re-research

But above all, now Claude Code follows your CLAUDE.md consistently because the context never fills up.

You won’t go faster, but now you have automatic context control in your workflow.

References

About Context Engineering

- Advanced Context Engineering for Coding Agents - Dex Horthy

- Frequent Intentional Compaction - HumanLayer

- Writing a Good CLAUDE.md - HumanLayer

- I Mastered the Claude Code Workflow - Ashley Ha

- Context Engineering SF: Advanced Context Engineering for Agents

- Avoiding Skill Atrophy in the Age of AI

- Effective context engineering for AI agents - Anthropic

- 12-Factor Agents - Own your context window